A day on crypto Twitter feels like a week. Hence, I figured there's enough material for a blog series that captures those days (weeks?) and perhaps offers some insight to those missing from the conversation. It's also an excuse to rejuvenate my blog, a place where I don't need to stick to 280 characters or on-topic banter. So here we go!

The day is Friday, and its beginning is nicely captured by @Loopify:

Now, before you run off to buy a year's supply of the fermented vegetable, let me clarify what Loopify is referring to. "My Fucking Pickle", pardon my French, is the name of a collection of 10,000 unique NFTs that will each depict a pickle with its own traits. It started as a joke but quickly escalated into a release you could actually buy. I say "will" because owners won't know which pickle they're getting (that is, which traits it will have) until a specific date next week.

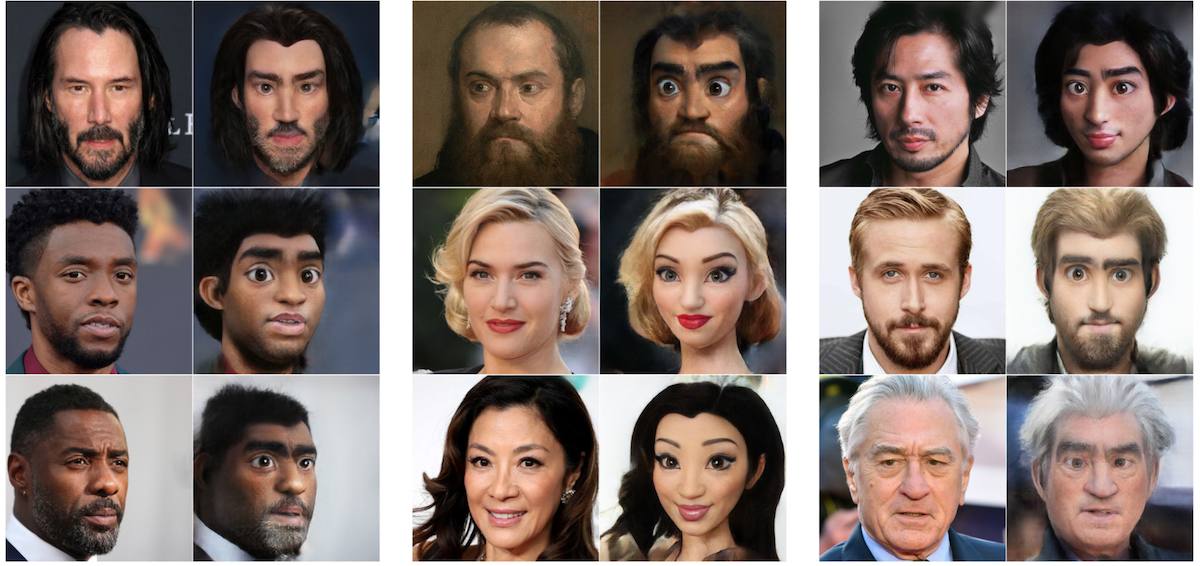

The project follows the example of other so-called generative collectible series such as CryptoPunks, Bored Apes and Hashmasks, except that it was brought to life within a week, whereas some of the others took months of planning. It will be interesting to see how this low-effort spin-off, which makes no promises, performs on the market. Since launch, the pickles have already tripled in price overnight.

Lisa Odette's Lady_008_Totem

Next up, it was hard to miss the quality art exchange under the #HENThousand tag, inspired by a work John Karel released in an edition of ten thousand for 1 tez. The initiative gave collectors a chance to snap up works by their favourite artists for a fraction of the usual price, with the artists burning any remaining copies after 24 hours. Works minted include Von Doyle's morphed painting Andromeda, DALEK's 10000 spacemonkeys, Lisa Odette's Lady_008_Totem and Marcus’ ‘Simpler Times’, to name a few.

Von Doyle's Andromeda

And finally, we wrap up our Friday with "Curation in the NFT space", a weekly live Twitter talk with @VerticalCrypto, @colbornbell, @sambrukhman, @flakoubay and today’s special guest @martjpg. We discussed building NFT collections, the latest creative approaches to curating crypto art, the opening of the Crypto and Digital Art Fair (CADAF), experimental approaches to selecting and buying artworks, marketplaces, generative collectibles and more! Tune in next Friday to hear more ;)

Oh, and follow me on Twitter: @aljaparis

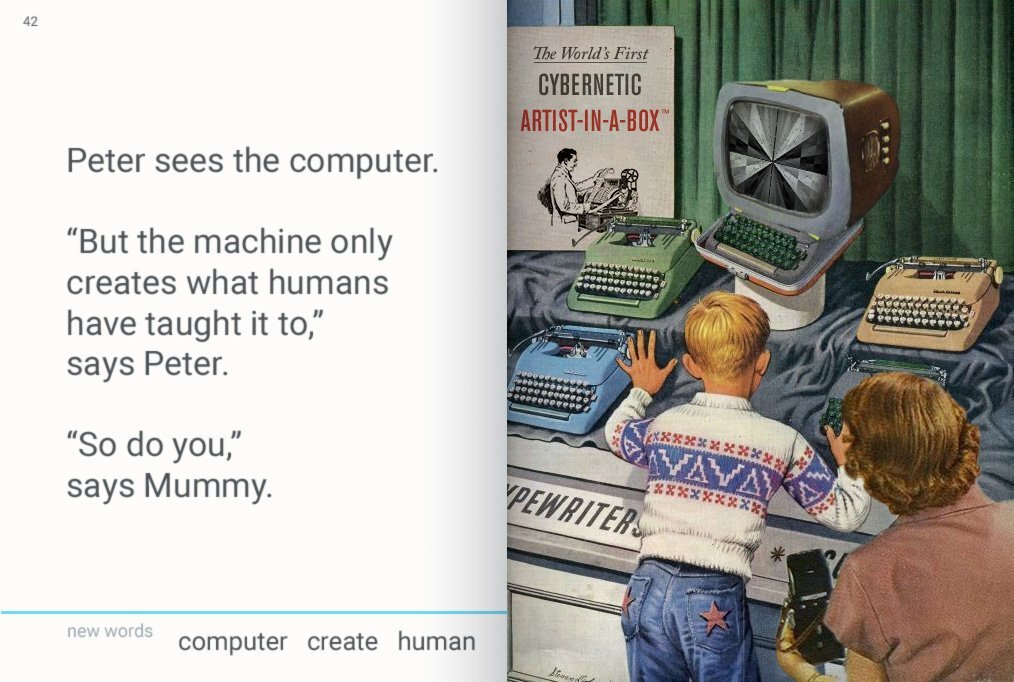

Cover image by Marcus titled ‘Simpler Times’